How Pipelines Work

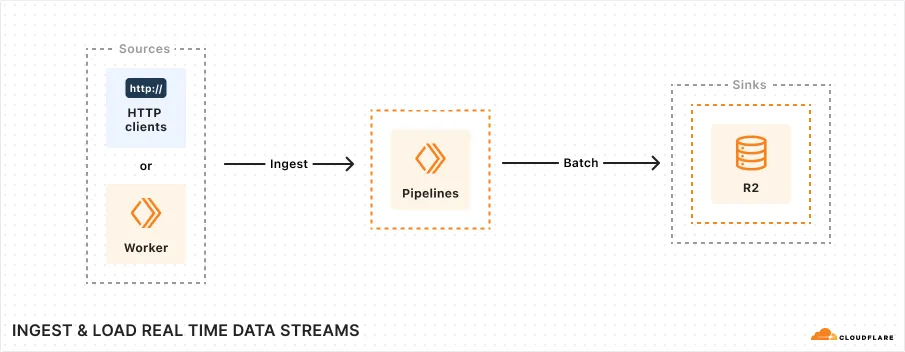

Cloudflare Pipelines lets you ingest data from a source and deliver it to a sink. It is built for high-volume, real-time data streams. Each pipeline can ingest up to 100 MB/s of data, via HTTP or a Worker, and load the data as files in an R2 bucket.

This guide explains how a pipeline works.

Pipelines supports the following sources:

- HTTP Clients, with optional authentication and CORS settings

- Cloudflare Worker, using the Pipelines Workers API

Multiple sources can be active on a single pipeline simultaneously. For example, you can create a pipeline that accepts data both from a Worker and via HTTP. Multiple Workers can be configured to send data to the same pipeline. There is no limit on the number of source clients.

Pipelines can ingest JSON-serializable records.
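As a rough illustration of what "JSON-serializable" means in practice, the sketch below validates records on the client before they are sent. The helper name `encode_records` is hypothetical, and wrapping the records in a JSON array as the request body is an assumption, not something this guide specifies.

```python
import json


def encode_records(records):
    """Serialize a list of records to a UTF-8 JSON array.

    Hypothetical client-side helper: the JSON-array body shape is an
    assumption for illustration, not a documented request format.
    """
    try:
        # allow_nan=False rejects NaN and Infinity, which are not valid JSON.
        return json.dumps(records, allow_nan=False).encode("utf-8")
    except (TypeError, ValueError) as exc:
        # TypeError: unsupported types such as set or datetime.
        # ValueError: out-of-range floats such as NaN.
        raise ValueError(f"record is not JSON-serializable: {exc}") from exc
```

Values such as sets, `datetime` objects, or `NaN` fail this check and need to be converted (for example, to lists or ISO 8601 strings) before sending.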

Pipelines supports delivering data into R2 Object Storage. Ingested data is delivered as newline-delimited JSON (NDJSON) files, with optional compression. Multiple pipelines can be configured to deliver data to the same R2 bucket.
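To make the output format concrete, here is a minimal sketch of how a list of records maps to NDJSON and back. It illustrates the file format only, not how a pipeline writes files internally. The function names are hypothetical, and gzip is assumed as the compression format purely for the example.

```python
import gzip
import json


def to_ndjson(records, compress=False):
    """Render records as NDJSON bytes, optionally gzip-compressed.

    gzip is an assumption for this example; the guide only says
    compression is optional.
    """
    # One compact JSON document per line, each terminated by a newline.
    text = "".join(json.dumps(r, separators=(",", ":")) + "\n" for r in records)
    data = text.encode("utf-8")
    return gzip.compress(data) if compress else data


def from_ndjson(data, compressed=False):
    """Parse an NDJSON payload back into a list of records."""
    if compressed:
        data = gzip.decompress(data)
    return [json.loads(line) for line in data.decode("utf-8").splitlines() if line]
```

A reader built like `from_ndjson` can process a delivered file line by line, without loading it as a single JSON document.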

Pipelines are designed to be reliable. Data sent to a pipeline should be delivered successfully to the configured R2 bucket, provided that the R2 API credentials associated with a pipeline remain valid.

Each pipeline maintains a storage buffer. Requests to send data to a pipeline receive a successful response only after the data is committed to this storage buffer.

Ingested data accumulates until a sufficiently large batch has been filled. Once the batch reaches its target size, the entire batch is converted to a file and delivered to R2.
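The buffering and batching behavior described above can be sketched as a toy in-memory model. This is a simplification under stated assumptions, not the actual implementation: `BatchBuffer`, `target_bytes`, and the `deliver` callback are all hypothetical names, and a real pipeline commits data to durable storage rather than a Python list.

```python
import json


class BatchBuffer:
    """Toy model: acknowledge once buffered, flush when the batch is full."""

    def __init__(self, target_bytes, deliver):
        self.target_bytes = target_bytes  # hypothetical batch size threshold
        self.deliver = deliver            # callback standing in for the R2 write
        self.lines = []
        self.size = 0

    def send(self, records):
        for record in records:
            line = json.dumps(record) + "\n"
            self.lines.append(line)
            self.size += len(line.encode("utf-8"))
        # The data is now in the buffer, so the caller can be acknowledged,
        # even though nothing may have been delivered yet.
        acknowledged = True
        if self.size >= self.target_bytes:
            self.flush()
        return acknowledged

    def flush(self):
        if not self.lines:
            return
        # The entire batch becomes a single file.
        self.deliver("".join(self.lines))
        self.lines, self.size = [], 0
```

The point of the model is the ordering: a successful response means the data was buffered, and delivery to the sink happens later, once per batch.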

Transient failures, such as network connectivity issues, are automatically retried.

However, if the R2 API credentials associated with a pipeline expire or are revoked, data delivery will fail. In this scenario, some data might continue to accumulate in the buffers, but the pipeline will eventually start rejecting requests.

Pipelines update without dropping records. Updating an existing pipeline effectively creates a new instance of the pipeline. Requests are gracefully re-routed to the new instance. The old instance continues to write data into your configured sink. Once the old instance is fully drained, it is spun down.

This means that updates might take a few minutes to take effect. For example, if you update a pipeline's sink, previously ingested data might continue to be delivered into the old sink.

If you send too much data or too many requests, the pipeline communicates backpressure by returning a 429 response to HTTP requests, or by throwing an error if you are using the Workers API. Refer to the limits to learn how much volume a single pipeline can support.
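One common way for an HTTP client to react to a 429 is to retry with exponential backoff and jitter. The sketch below is one possible approach, not a documented client. `send_with_backoff` is a hypothetical helper, and `send` is any callable you supply that performs the request and returns the HTTP status code.

```python
import random
import time


def send_with_backoff(send, payload, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call send(payload), retrying with exponential backoff while it returns 429.

    `send` is a caller-supplied callable returning an HTTP status code;
    the retry limits and delays are illustrative defaults.
    """
    for attempt in range(max_attempts):
        status = send(payload)
        if status != 429:
            return status
        if attempt < max_attempts - 1:
            # Full jitter: wait a random time up to base_delay * 2^attempt
            # so that many clients do not retry in lockstep.
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    # Still under backpressure after the final attempt.
    return 429
```

Injecting `send` and `sleep` keeps the helper independent of any particular HTTP library and easy to test without real network calls.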

If you are consistently seeing backpressure from your pipeline, consider the following strategies:

- Increase the shard count to increase the maximum throughput of your pipeline.

- Send data to a second pipeline if you receive an error. You can set up multiple pipelines to write to the same R2 bucket.
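The second strategy can be sketched as a small fallback helper. This is a minimal illustration, assuming each pipeline is represented by a caller-supplied callable that sends the payload and returns an HTTP status code. `send_with_fallback` and that calling convention are hypothetical.

```python
def send_with_fallback(payload, pipelines):
    """Try each pipeline in order and return the index of the first that accepts.

    `pipelines` is a list of caller-supplied callables, each returning an
    HTTP status code for the attempted send.
    """
    for index, send in enumerate(pipelines):
        status = send(payload)
        if 200 <= status < 300:
            return index
        # Any non-2xx status (including 429) falls through to the next pipeline.
    raise RuntimeError("no pipeline accepted the payload")
```

Because the pipelines in this scheme write to the same R2 bucket, downstream readers still see the data in one place regardless of which pipeline accepted it.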